My last article focused on the high-level definition of Disaster Avoidance and Disaster Recovery. Both are essential terms for Data Center and Compute Infrastructure Architecture.

Working in the manufacturing sector, I often hear that we need an infrastructure to support our manufacturing workloads with no room for application downtime. Any reasonable person knows that zero downtime is unrealistic. However, we can take significant steps to avoid potential downtime. In this article, I will not provide answers; instead, I will try to build as complete a list as possible of what you have to consider when designing a solution like this.

Areas that can cause application downtime include:

- Electricity: loss of building power and limited UPS runtime

- Internet connectivity

- Network failure

- Data Center outage

- Fire

- Flooding

- Hardware failure

- Patching

- Application failure

- Etc.

There are probably many other causes of application outages I could have mentioned, but I am sure you get the point: many things can take an application down. Some of these you can easily control, while others are harder to control. What you, as the Architect of a solution like this, need to understand is that the more resilience you build into the solution, the more complex and expensive it becomes.

Start with the Data Center, or location. Will you use one or several Data Centers for your infrastructure? Are they your own buildings, or are they co-location facilities? Do you use hyperscalers (public cloud)? Can you choose which electricity and natural gas vendors supply the site? Can you have several vendors in the same data center? Do their feeds take different routes into the DC? If they all pass through the same hub on the way into your DC, that hub becomes a single point of failure for your solution. You may not be able to control it, but you should be aware of it so you can plan for it.
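To see why a shared hub matters, a rough availability model helps. The sketch below is a minimal illustration that assumes independent failures; the 99.9% and 99.95% figures are hypothetical placeholders, not vendor numbers. Components that back each other up combine in parallel, while anything every path depends on combines in series.

```python
"""Rough availability model: redundant feeds in parallel, shared hub in series.

Assumes failures are independent, which real feeds sharing a trench often are not.
All availability figures below are hypothetical placeholders.
"""


def parallel(*availabilities: float) -> float:
    """Availability of redundant components: the system is up if any one is up."""
    all_down = 1.0
    for a in availabilities:
        all_down *= 1.0 - a
    return 1.0 - all_down


def series(*availabilities: float) -> float:
    """Availability of a chain: every component must be up."""
    result = 1.0
    for a in availabilities:
        result *= a
    return result


# Two hypothetical utility feeds, each 99.9% available.
feed_a = feed_b = 0.999
# A hypothetical shared hub that both feeds pass through, 99.95% available.
shared_hub = 0.9995

redundant_feeds = parallel(feed_a, feed_b)  # 1 - 0.001**2 = 0.999999
with_shared_hub = series(redundant_feeds, shared_hub)

print(f"Two independent feeds:      {redundant_feeds:.6f}")
print(f"Same feeds via shared hub:  {with_shared_hub:.6f}")
```

However redundant the feeds are, the combined figure can never exceed the availability of the shared hub, which is exactly what makes it a single point of failure.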

The same goes for internet and network connectivity into the building the DC is located in. If you use more than one DC, what kind of connectivity do you have between the buildings? Do the fiber connections follow physically separate runs? Again, if they don't, a shared run becomes a single point of failure that can bring down the applications or deny you the resilience you designed for everywhere else.
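One practical way to answer the separate-runs question is to get the physical route of each circuit from the carrier and compare them segment by segment. The sketch below uses hypothetical segment names (ducts, bridges, building entries); any segment present in both routes is a candidate single point of failure.

```python
"""Check two inter-DC fiber routes for shared physical segments.

The segment IDs are hypothetical; in practice they come from the carrier's
route maps, which you may have to request explicitly.
"""


def shared_segments(route_a: list[str], route_b: list[str]) -> set[str]:
    """Return segments that appear in both routes (potential single points of failure)."""
    return set(route_a) & set(route_b)


# Hypothetical routes between two data centers.
primary = ["dc1-entry-north", "duct-17", "bridge-3", "duct-22", "dc2-entry-north"]
secondary = ["dc1-entry-south", "duct-40", "bridge-3", "duct-41", "dc2-entry-south"]

overlap = shared_segments(primary, secondary)
if overlap:
    print("Not diverse, shared segments:", sorted(overlap))
else:
    print("Routes are physically diverse")
```

In this made-up example both routes cross `bridge-3`, so a single incident there would cut both links despite the separate building entries.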

Within the DC, there are considerations like UPS. Are the UPS units redundant? Do they have the capacity and runtime to keep the infrastructure running during a power outage? Does the network have redundancy? Does the facility need more than one network infrastructure? When it comes to hardware, does every device have at least two power supplies, and are they correctly cabled to separate power feeds, so you can lose half the power in the DC without losing services?
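The power-supply question lends itself to a simple audit. This is a sketch over a hypothetical inventory (the host names, the feed labels "A"/"B" and the data structure are all assumptions) that flags any host that would go down if one feed were lost.

```python
"""Verify every host has at least two PSUs cabled to different power feeds.

The inventory format and feed labels are hypothetical; real data would come
from a DCIM tool or the rack documentation.
"""

# Hypothetical inventory: host name -> feeds its power supplies are plugged into.
inventory = {
    "esx01": ["A", "B"],
    "esx02": ["A", "A"],  # both PSUs on the same feed: miscabled
    "esx03": ["B"],       # only one PSU
}


def power_issues(hosts: dict[str, list[str]]) -> dict[str, str]:
    """Return hosts that would go down if a single feed were lost, with a reason."""
    issues = {}
    for host, feeds in hosts.items():
        if len(feeds) < 2:
            issues[host] = "fewer than two power supplies"
        elif len(set(feeds)) < 2:
            issues[host] = "all power supplies on feed " + feeds[0]
    return issues


for host, reason in power_issues(inventory).items():
    print(f"{host}: {reason}")
```

Note that this only checks cabling; it does not verify that the remaining feed and its UPS can carry the full load on their own.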

As asked at the start, will the infrastructure span more than one DC, and if so, how many? What is the strategy? Will storage be shared between the DCs or not, and will compute be stretched across them? What about the network: will it be stretched at Layer 2 or routed at Layer 3? If storage is stretched, how is it replicated: synchronously or asynchronously? Does the networking infrastructure between the sites support that design? Which protocol does the storage use: FC, FCoE, or IP? What kind of storage infrastructure is used?
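Distance matters for synchronous replication, because every write waits for the remote site to acknowledge it. Below is a back-of-the-envelope sketch, assuming roughly 5 µs per km of one-way propagation in fiber and a placeholder 5 ms round-trip budget; substitute the figure your storage vendor actually supports.

```python
"""Estimate the latency synchronous replication adds, from fiber distance alone.

Assumes light travels about 200,000 km/s in fiber (about 5 microseconds per km
one way) and ignores switch, array and protocol overhead, so real numbers are higher.
The 5 ms round-trip budget is a placeholder, not a vendor limit.
"""

FIBER_US_PER_KM = 5.0  # one-way propagation delay, microseconds per km


def min_rtt_ms(route_km: float) -> float:
    """Lower bound on round-trip time in ms over a fiber route of the given length."""
    return 2 * route_km * FIBER_US_PER_KM / 1000.0


def sync_feasible(route_km: float, rtt_budget_ms: float = 5.0) -> bool:
    """True if propagation delay alone fits within the round-trip budget."""
    return min_rtt_ms(route_km) <= rtt_budget_ms


for km in (10, 100, 500):
    print(f"{km:>4} km route: >= {min_rtt_ms(km):.2f} ms RTT, "
          f"within budget: {sync_feasible(km)}")
```

Propagation delay is only a lower bound: switches, storage arrays and protocol overhead add more, and the fiber route is usually longer than the straight-line distance between the sites.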

What kind of Compute platform are you using? Is it HCI, CI, or separate compute, storage, and network? Which CPU architecture, and how many CPUs per compute node? How much RAM? Do you want high-density or lower-density nodes? With less CPU and RAM per node, you need more nodes in your DC. This determines how many virtual machines are affected when a node goes down: if a high-CPU, high-RAM node fails, it takes more VMs with it.
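The density trade-off can be put into numbers. The sketch below assumes VMs are spread evenly across nodes and that the cluster must survive one node failure; the 400-VM figure and the node counts are hypothetical.

```python
"""Compare dense and less-dense cluster designs for the same VM count.

Assumes VMs are spread evenly and N+1 redundancy (survive one node failure).
The VM count and node counts are hypothetical.
"""
import math


def vms_affected(total_vms: int, nodes: int) -> int:
    """VMs that must restart elsewhere when one node fails (even spread, rounded up)."""
    return math.ceil(total_vms / nodes)


def max_safe_utilization(nodes: int, failures_tolerated: int = 1) -> float:
    """Highest average utilization that still leaves room to absorb failed nodes."""
    return (nodes - failures_tolerated) / nodes


total_vms = 400
for nodes in (4, 8, 16):
    print(f"{nodes:>2} nodes: {vms_affected(total_vms, nodes):>3} VMs per failure, "
          f"run at most {max_safe_utilization(nodes):.0%} utilized")
```

More, smaller nodes mean fewer VMs hit per failure and less capacity held in reserve, at the cost of more hardware, switch ports and possibly licenses.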

Which hypervisor do you want to run: VMware vSphere, Microsoft Hyper-V, Red Hat Virtualization, OpenStack, or something else? They all have advantages and challenges, and one may suit you better than the others. Are the workloads VMs, containers, or both? Is the application architecture stateless or stateful, load balanced, tiered, and so on?

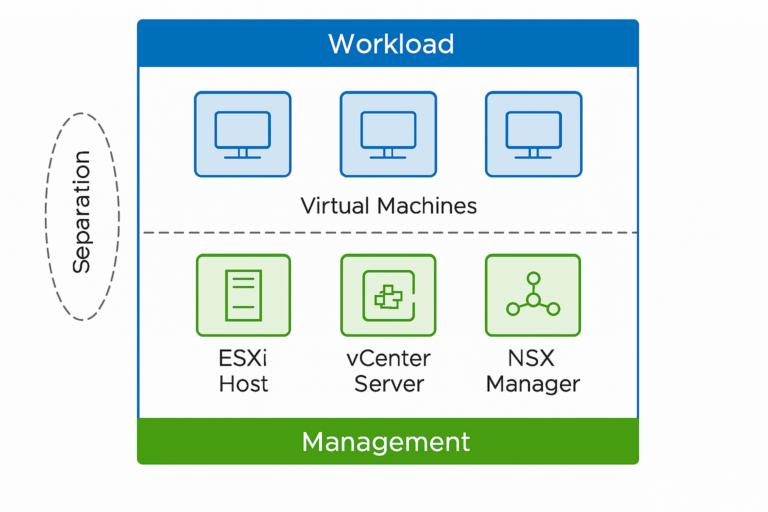

Say we choose VMware. Will we use vSphere Fault Tolerance (FT) on any VMs? Will we support auto-scaling of the application? Do we need a container orchestration platform? Will we create separate vSphere clusters based on workload type? Are there license considerations for clustering? How many NICs does each ESXi host have? Do we use lockdown mode? Do we integrate with Active Directory? How will we deploy vCenter?

In coming articles, I will look at how a VMware architecture could support a use case where downtime is not accepted, or, in IT terms, where the uptime requirement is four nines (99.99%) or higher.
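For reference, here is what the number of nines means in allowed downtime per year. This is a minimal sketch assuming a 365-day year and counting all downtime, including planned patching; many SLAs exclude maintenance windows, so check how yours is defined.

```python
"""Translate an availability target ("number of nines") into allowed downtime.

Assumes a 365-day year and counts all downtime, planned patching included.
"""

MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600


def downtime_minutes_per_year(nines: int) -> float:
    """Allowed downtime per year for `nines` nines of availability (e.g. 4 -> 99.99%)."""
    unavailability = 10.0 ** -nines
    return MINUTES_PER_YEAR * unavailability


for n in (3, 4, 5):
    availability = 100 * (1 - 10.0 ** -n)
    print(f"{availability:.3f}%: {downtime_minutes_per_year(n):.1f} minutes/year")
```

Four nines leaves roughly 52.6 minutes per year, which a single poorly handled patch window can use up.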