I intend to start a series of performance-related articles that focus on topics every VMware administrator should be aware of to ensure optimal performance on their platform.

In VMware we (often) overprovision resources. We assume that the virtual machines (VMs) running on the platform won’t all consume the maximum of their assigned resources at the same time, so we lend out more resources than physically exist in order to run more workloads on the platform. VMware has released a detailed performance best practice guide.

A discussion I often have with application owners on my VMware platforms is about the number of vCPUs (virtual CPUs). A higher number of CPUs should make the VM faster, right? This is probably true, but only to some extent.

CPU READY

CPU Ready Time – The time your VM is ready to run but is waiting in line to use the CPU on the host. CPU Ready can also be called the CPU Scheduler time: a high value is bad, a low value is good.

Say VM A has two cores, VM B has 8, and the host has 1 socket with 8 cores. VM A can access the CPU any time there are 2 cores free, while VM B has to wait until all the cores are free. This will give VM B a higher CPU Ready time.

CPU Ready time is time during which the applications and processes in the VM are not performing their intended tasks and are instead left waiting. If this waiting time is too high, it will impact system performance.

Symptoms

- Services running in guest virtual machines respond slowly.

- Applications running in the guest virtual machines respond intermittently.

- The guest virtual machine may seem slow or unresponsive.

These symptoms are generic, and CPU Ready is not the only possible cause. Other things that can produce the same symptoms include:

- Memory overcommitment

- Storage latency

- Network latency

- Other CPU Constraints

What is normal

While it is easy to look at CPU usage and understand that you are using 25% of 100% total capacity, it is a little more difficult to understand what is normal versus bad when looking at CPU Ready.

For starters, CPU Ready in the vSphere Client is measured in milliseconds (ms). This needs to be reconciled with VMware’s best practice guidelines, which indicate it is best to keep your VMs below 5% CPU Ready per vCPU. When making this reconciliation, it’s important to note that the real-time vSphere performance graphs are based upon 20-second data points.

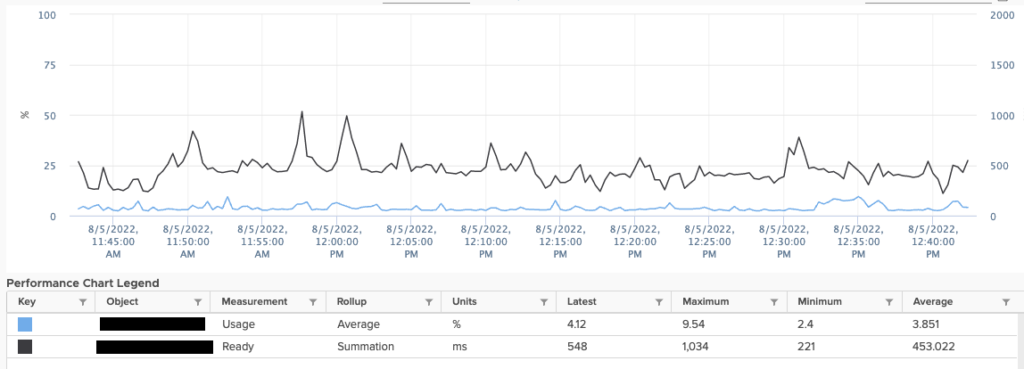

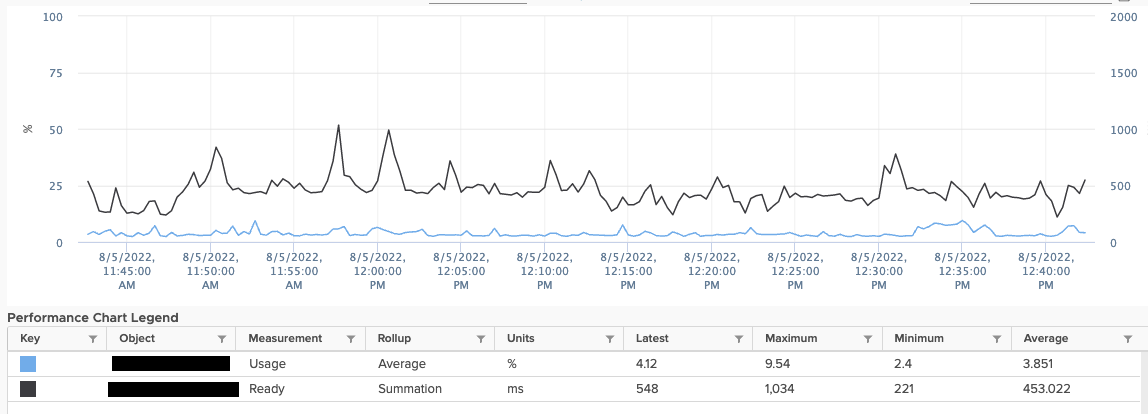

Looking at the figure above, to convert this to a percentage value, we take the average of 453 ms and divide it by the 20-second interval (20000 ms) to arrive at a CPU Ready value as a percentage. In the case of this graph, 453/20000 = 0.02265, or about 2.27%, which is a little under half of the VMware recommended 5% guideline.
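The conversion above can be written as a small helper. This is only a sketch: the function name and parameters are made up for illustration, the 20-second default matches the data points described above, and dividing by the number of vCPUs assumes the millisecond value you read is a total for the whole VM rather than for a single vCPU.

```python
def cpu_ready_percent(ready_ms: float, interval_s: float = 20.0, num_vcpus: int = 1) -> float:
    """Convert a CPU Ready value in milliseconds to a percentage per vCPU.

    ready_ms   -- CPU Ready value read from the performance graph (ms)
    interval_s -- length of one data point in seconds (20 for the graph above)
    num_vcpus  -- vCPUs to average over (assumption: ready_ms is a VM-wide total)
    """
    if interval_s <= 0:
        raise ValueError("interval_s must be positive")
    if num_vcpus < 1:
        raise ValueError("num_vcpus must be at least 1")
    interval_ms = interval_s * 1000.0
    # Share of the sampling interval spent waiting, averaged over the vCPUs.
    return ready_ms / interval_ms / num_vcpus * 100.0


if __name__ == "__main__":
    # The value from the graph above: 453 ms in a 20-second sample.
    print(f"{cpu_ready_percent(453):.3f}%")  # prints 2.265%
```

If you read the value from a chart with a longer interval, pass that interval in seconds instead of relying on the default.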

There are a couple of other considerations to be aware of when measuring CPU Ready.

- There will always be some % of ready time. No virtual CPU has 100% access to the physical CPU as the scheduler takes vCPUs on and off the pCPU. In fact, every 25ms the ESXi CPU scheduler evaluates whether or not a vCPU should be on or off a CPU core.

- What is considered a problematic CPU Ready value will differ from system to system. Some applications are less tolerant to higher CPU Ready than others.

Misconceptions of CPU Ready

There are a couple of common CPU Ready misconceptions. The first is hyperthreading and how it impacts performance. The ESXi scheduler treats each logical CPU on a hyperthreaded core as a full core. However, a hyperthreaded core doesn’t offer 100% performance capacity per thread. It would not be accurate to consider a single CPU core with hyperthreading as providing the performance of two full CPU cores. In other words, a hyperthreaded CPU offers only about 75% of the performance of a real CPU core, so it is important to be very conservative when calculating the CPU oversubscription on hosts with CPU hyperthreading in use.
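To see how much the way you count CPUs changes the picture, here is a rough sketch that computes the vCPU oversubscription ratio of a single host three ways. The class and function names are hypothetical, the 0.75 factor is simply the figure quoted above, and applying it to every logical CPU is my own assumption. Counting only physical cores is the most conservative view.

```python
from dataclasses import dataclass


@dataclass
class Host:
    physical_cores: int
    hyperthreading: bool = True


def oversubscription_ratios(allocated_vcpus: int, host: Host, ht_factor: float = 0.75) -> dict:
    """Return vCPU-to-CPU ratios for one host under three counting methods."""
    if host.physical_cores < 1:
        raise ValueError("physical_cores must be at least 1")
    if not 0 < ht_factor <= 1:
        raise ValueError("ht_factor must be in (0, 1]")
    # With hyperthreading each physical core shows up as two logical CPUs.
    logical = host.physical_cores * (2 if host.hyperthreading else 1)
    # Assumption: discount every logical CPU by ht_factor when hyperthreading is on.
    effective = logical * ht_factor if host.hyperthreading else float(host.physical_cores)
    return {
        "per_logical_cpu": allocated_vcpus / logical,
        "per_discounted_cpu": allocated_vcpus / effective,
        "per_physical_core": allocated_vcpus / host.physical_cores,
    }


if __name__ == "__main__":
    # Hypothetical host: 8 physical cores with hyperthreading, 32 vCPUs allocated.
    for name, ratio in oversubscription_ratios(32, Host(physical_cores=8)).items():
        print(f"{name}: {ratio:.2f}:1")
```

The same 32 vCPUs look like 2:1 when every hyperthread is counted as a full core and 4:1 when only physical cores are counted, which is why the counting method matters when you judge whether a host is oversubscribed.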

Second, a large amount of available CPU MHz and GHz on an ESXi host does not necessarily mean that you are operating without CPU Ready issues. Aggregate usage doesn’t show how many cores virtual machines are occupying at any given moment, or whether they are preventing other VMs from being scheduled. Likewise, a VM’s CPU usage and CPU Ready metrics are not directly correlated. A VM can have a serious issue with CPU Ready even when its usage doesn’t appear to be that high. In order to have a full picture of your CPU performance, you need to look at both CPU Ready and CPU usage.

A common question about CPU Ready is whether or not it can be relieved by using VMware’s Distributed Resource Scheduler (DRS). The short answer is “no,” DRS won’t help with CPU Ready issues. The reason for this is that DRS does not take CPU scheduling into account. DRS just monitors CPU and memory usage when determining where to place virtual machines and does not measure pCPU contention between allocated vCPUs.

How to resolve CPU Ready

Proper sizing of virtual machines and hosts: at the start of this article I mentioned the discussion about whether more virtual CPUs give better performance. Ensure that the VMs are reasonably sized for the physical CPUs the ESXi host provides.

Ensure that the CPU overcommitment within the vSphere Cluster is reasonable. If you have done the step above and CPU Ready is still high, add more ESXi hosts to the cluster to increase the number of physical CPUs available to the VMs.

Be careful with settings such as CPU limits and CPU affinity rules. Both restrict when or where the scheduler may run a VM, which can increase CPU Ready.
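As a back-of-the-envelope aid for the advice on overcommitment and adding hosts, the sketch below estimates how many hosts a cluster needs to stay at or below a chosen vCPU-to-core ratio. The function name is hypothetical and the target ratio is a value you pick for your own environment, not a VMware recommendation. It counts physical cores only and ignores spare capacity for host failures.

```python
import math


def hosts_needed(total_vcpus: int, cores_per_host: int, target_ratio: float) -> int:
    """Smallest number of hosts that keeps vCPUs per physical core <= target_ratio."""
    if total_vcpus < 0:
        raise ValueError("total_vcpus must not be negative")
    if cores_per_host < 1:
        raise ValueError("cores_per_host must be at least 1")
    if target_ratio <= 0:
        raise ValueError("target_ratio must be positive")
    # Each host can carry cores_per_host * target_ratio vCPUs at the chosen ratio.
    return math.ceil(total_vcpus / (cores_per_host * target_ratio))


if __name__ == "__main__":
    # Hypothetical cluster: 400 allocated vCPUs, 16-core hosts, target of 3 vCPUs per core.
    print(hosts_needed(400, 16, 3.0))  # prints 9
```

Comparing the result with the current host count gives a quick indication of whether right-sizing the VMs is enough or whether more hosts are needed.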

Conclusion

When looking at a vSphere Cluster in vCenter, it can look like the performance is great. There is plenty of free CPU in the cluster, yet some VMs may still experience performance issues. CPU Ready can be one of the factors behind those issues. It is important to resolve high CPU Ready times early, because they will only become worse as the cluster gets more densely packed.

Hi,

I’m not sure why this is still believed:

If VM A has to cores, VM B has 8 and the host has 1 socket with 8 cores. VM A can access the CPU at any time there is 2 cores free, VM B has to wait until all the cores are free. This will give the VM B a higher CPU ready time.

This is not true anymore. CPU scheduling has changed a lot since ESXi 4.1.

https://www.vmware.com/files/pdf/techpaper/VMware-vSphere-CPU-Sched-Perf.pdf

Pages 8 and 9

By not requiring multiple vCPUs to be scheduled together, co-scheduling wide multiprocessor virtual machines becomes efficient.