When I wrote the article on CPU Ready, I touched on the vSphere CPU Scheduler in relation to DRS and how it is not CPU Ready aware.

I mentioned that the vSphere CPU Scheduler is the process within vSphere that decides which VM's vCPU gets scheduled onto a pCPU, and when. In this article I want to dive deeper into what the vSphere CPU Scheduler is and what the different modes we can run it in entail.

There are three schedulers to choose from in ESXi 6.7 Update 2: the default, SCAv1, and SCAv2.

The vSphere CPU Scheduler determines which virtual machine gets to use the processors. It tries to maximize utilisation of the processors while also being “fair” to all the workloads.

In recent years we have seen several CPU vulnerabilities. They have affected all systems, regardless of how actively you patch, and they allow an attacker to bypass all levels of security measures within a system.

The two Side-Channel Aware (SCA) CPU schedulers present solutions to recent Intel CPU bugs such as L1TF and MDS. They improve the security posture of the VMware environment. Let us look closer at what they entail, and what impact choosing either of them will have for you.

What is the CPU Scheduler?

The role of the CPU scheduler is to assign execution contexts to physical CPUs in a way that ensures VM processing throughput, utilisation, and responsiveness.

On an operating system such as Windows, the execution context is represented by a process. On a VMware hypervisor the execution context corresponds to a Global Context Value (VMware's own documentation calls this a “world”).

A VM running on ESXi is made up of a multitude of Global Values:

- One Global Value per vCPU

- One Global Value per SVGA device

- One Global Value per keyboard

- One Global Value per Screen

The VMkernel is also represented on the ESXi host, by Global Values that do not belong to any virtual machine. The VMkernel performs system tasks on the ESXi host; one of the better-known ones is vMotion.

The CPU Scheduler is responsible for choosing which Global Context is scheduled onto a physical processor.

- The CPU Scheduler uses algorithms such as a proportional-share based algorithm and relaxed co-scheduling.

- Relaxed co-scheduling is used for multi-vCPU VMs (2 or more vCPUs). These multi-vCPU VMs are also known as SMP, or Symmetric Multi-Processing, VMs.

- Every 2-30 milliseconds, the CPU scheduler checks physical CPU usage and migrates vCPUs from one core to another, or from one socket to another, if necessary.

- The default time slice is 50 milliseconds, which means the CPU scheduler allows a vCPU to run on a pCPU for at most 50 ms.

- The CPU Scheduler is fully aware of the NUMA architecture. Hence NUMA, Wide NUMA and vNUMA are taken into consideration while scheduling.
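To make the proportional-share idea from the list above more concrete, here is a minimal Python sketch. It is a toy model and not the actual ESXi algorithm: the `VCpu` class, the `shares` values and the "lowest consumed time per share runs next" rule are assumptions made for illustration. Only the 50 ms time slice comes from the text.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class VCpu:
    name: str
    shares: int               # hypothetical share value, higher means more CPU time
    consumed_ms: float = 0.0  # CPU time this vCPU has received so far


def pick_next(ready: List[VCpu]) -> VCpu:
    """Pick the vCPU that has received the least CPU time relative to its shares."""
    return min(ready, key=lambda v: v.consumed_ms / v.shares)


def simulate(vcpus: List[VCpu], slices: int, quantum_ms: int = 50) -> List[str]:
    """Hand out `slices` time slices on a single pCPU and return the run order."""
    order = []
    for _ in range(slices):
        chosen = pick_next(vcpus)
        chosen.consumed_ms += quantum_ms  # the vCPU runs for one full time slice
        order.append(chosen.name)
    return order


vcpus = [VCpu("a", shares=2000), VCpu("b", shares=1000)]
order = simulate(vcpus, slices=6)
```

With twice the shares, vCPU `a` ends up with roughly twice as many time slices as vCPU `b`. A real scheduler also has to handle co-scheduling, NUMA placement and migration, none of which is modelled here.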

CPU Vulnerabilities

In January 2018 it became public that CPU data cache timing could be abused to efficiently leak information out of mis-speculated CPU execution, leading to (at worst) arbitrary virtual memory read vulnerabilities across local security boundaries in various contexts.

- Variant 1: bounds check bypass (CVE-2017-5753 and CVE-2018-3693) – a.k.a. Spectre

- Variant 2: branch target injection (CVE-2017-5715) – a.k.a. Spectre

- Variant 3: rogue data cache load (CVE-2017-5754) – a.k.a. Meltdown

VMware released VMSA-2018-0002 and KB-52245 on this topic.

The Intel CPU vulnerability L1TF (L1 Terminal Fault) was published in August 2018. VMware released VMSA-2018-0020 with workarounds for the vulnerability, which has two attack vectors:

- Sequential-context attack vector: a malicious VM can potentially infer recently accessed L1 data of a previous context (hypervisor thread or other VM thread) on either logical processor of a processor core.

- Concurrent-context attack vector: a malicious VM can potentially infer recently accessed L1 data of a concurrently executing context (hypervisor thread or other VM thread) on the other logical processor of the Hyper-Threading enabled processor core.

In May 2019 Intel released data on a new group of CPU vulnerabilities called Microarchitectural Data Sampling (MDS). The attacks using this methodology are known as Fallout, RIDL, ZombieLoad and ZombieLoad 2. VMware VMSA-2019-0008 addresses the mitigation of MDS attacks in VMware vSphere software.

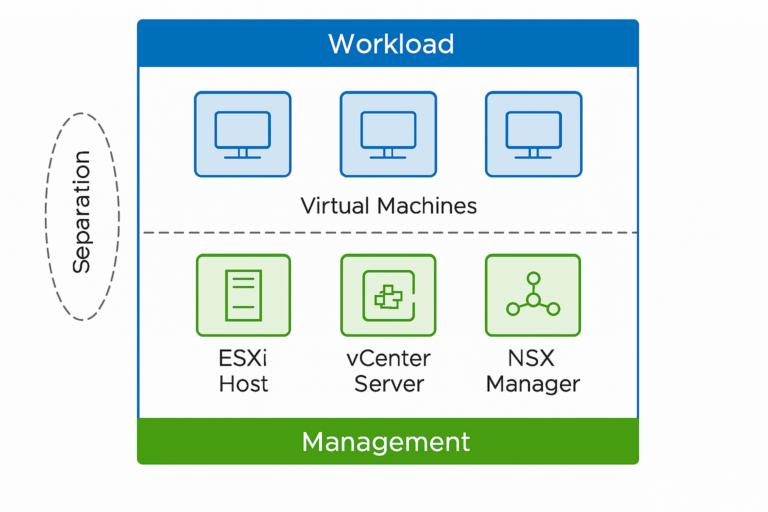

Host Security Boundary – Default Scheduler

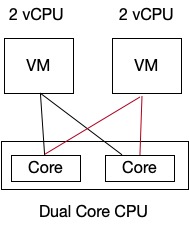

With this mode, vCPUs are scheduled across the physical cores of the CPU. Multiple vCPUs of a VM are not scheduled on the same physical core. The image below shows how four VMs with 2 vCPUs each are scheduled on an ESXi host whose processor has 2 cores and no Hyper-Threading.

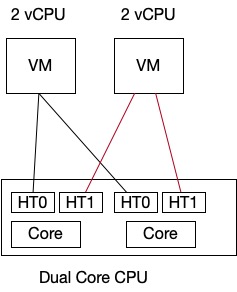

If Hyper-Threading were enabled on the CPU, it would look like this.

The default CPU scheduling policy retains full performance. It is used when the advanced settings are hyperthreadingMitigation = FALSE and hyperthreadingMitigationIntraVM = N/A.

The default scheduler has no inherent security awareness. The host security boundary allows information leakage between all VMs and the hypervisor running on a given host, but it prevents leakage between hosts. VMs on the host must all be considered part of the same information security boundary as each other and the hypervisor.

Side-Channel Aware Scheduler v1 (SCAv1)

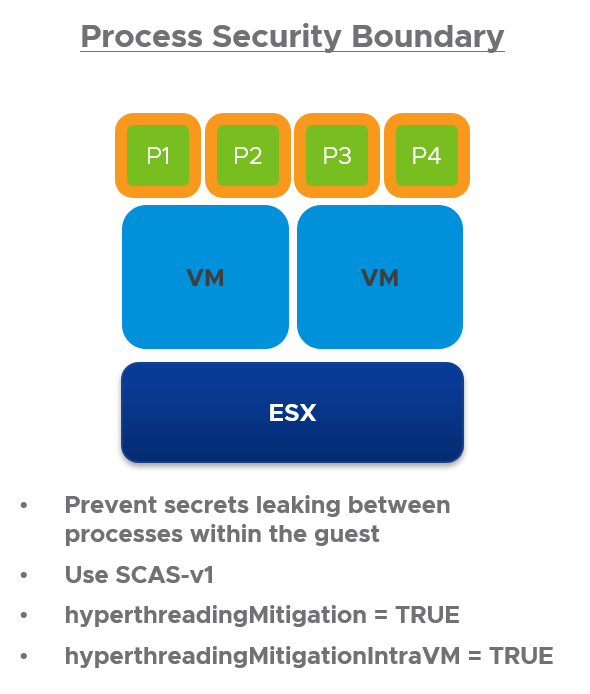

SCAv1 implements per-process protections that help against L1TF and MDS. It is the most secure option but can be the slowest. The process security boundary ensures that concurrent-context attacks using speculative side channels do not expose information across different processes or security contexts within the guest.

This mode is enabled by setting

- hyperthreadingMitigation = TRUE

- hyperthreadingMitigationIntraVM = TRUE

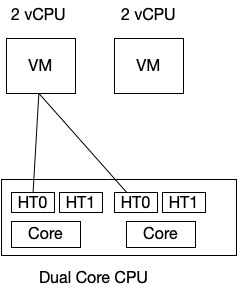

SCAv1 affects scheduling as follows. We continue to assume an ESXi host with a dual-core CPU and two VMs with 2 vCPUs each. One of the VMs is scheduled first, and after its time slice the other VM starts executing.

We see that one thread of each core is used while the other one is idle. HT1 is not used even if a second VM has demand and is waiting to be scheduled. Once the first VM's time slice ends, the second VM is scheduled, as shown below. The HT1 thread is idle again.

With this CPU scheduler model, two Global Contexts of the same VM have no access to each other's information. One thread is always idle, which results in reduced performance.

Side-Channel Aware Scheduler v2 (SCAv2)

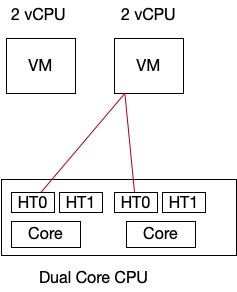

The VM security boundary prevents information leaking between two different VMs on a host or between a VM and the hypervisor.

SCAv2 is enabled by setting

- hyperthreadingMitigation=TRUE

- hyperthreadingMitigationIntraVM=FALSE

This option provides a balance of performance and security for environments where the VM is considered the information security boundary.

Unlike SCAv1, SCAv2 uses both threads of a CPU core. Also, unlike the default mode, SCAv2 allows both threads of a core to be used by the Global Contexts of the same VM.

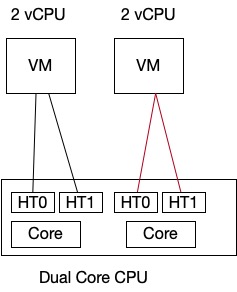

If we look at our previous example of 2 VMs with 2 vCPUs each on a hyper-threaded dual-core CPU, it would look like this.

As you can see, both threads are being used, and the two threads of a core are always used by the same VM. CPU threads are not shared between VMs because of the security boundary model.
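The difference between the two side-channel aware modes can be sketched with a small Python toy model. It only mirrors the dual-core, two-VM example described in this article; the function name `place_first_slice` and the vCPU labels are made up for illustration, and the real scheduler is far more dynamic than this.

```python
from typing import Dict, List, Optional, Tuple

# One entry per physical core: (vCPU on thread HT0, vCPU on thread HT1).
Core = Tuple[Optional[str], Optional[str]]


def place_first_slice(mode: str, cores: int, vms: Dict[str, int]) -> List[Core]:
    """Toy placement of vCPUs onto hyper-threaded cores for one time slice."""
    per_vm = [[f"{vm}-vcpu{i}" for i in range(n)] for vm, n in vms.items()]
    if mode == "SCAv1":
        # One vCPU per core; the sibling thread (HT1) stays idle.
        pending = [vcpu for vcpus in per_vm for vcpu in vcpus]
        slots: List[Core] = [(vcpu, None) for vcpu in pending]
    elif mode == "SCAv2":
        # Both threads of a core may be used, but only by vCPUs of the same VM.
        slots = []
        for vcpus in per_vm:
            for i in range(0, len(vcpus), 2):
                pair = vcpus[i:i + 2]
                slots.append((pair[0], pair[1] if len(pair) > 1 else None))
    else:
        raise ValueError(f"unknown mode: {mode}")
    placement = slots[:cores]  # whatever does not fit waits for a later slice
    placement += [(None, None)] * (cores - len(placement))
    return placement


vms = {"vm1": 2, "vm2": 2}
scav1 = place_first_slice("SCAv1", cores=2, vms=vms)
scav2 = place_first_slice("SCAv2", cores=2, vms=vms)
```

In this model SCAv1 runs only `vm1` in the first time slice, with HT1 idle on both cores, while SCAv2 runs both VMs at once, each confined to its own core.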

How do I choose?

The sad truth is that if you choose the most secure option you will lose up to 30% of the CPU performance in your clusters. With no mitigation you will have higher performance, but the recent CPU exploits have shown how an attacker can get information from other VMs running on the infrastructure.

“Does any VM have multiple users or processes where you do not want to leak secrets between those users or processes? This includes VBS, containers, terminal servers, etc.”

One key consideration when deciding which scheduler to use is whether all the processes inside a virtual machine have the same security needs and risk. Because L1TF and MDS allow secrets to leak between processes, if those processes belong to different users, different customers, or different applications then they all become part of the same security scope. This level of risk may be a problem, and it is a risk management decision that only you can make.

“Do you have CPU capacity headroom in the cluster?”

The performance impacts of these remediations affect every workload differently. That said, SCAv1 worst case performance loss is generally regarded as 30%, and SCAv2 is generally 10%. If you don’t have extra CPU headroom in your cluster you may have issues. Assuming that’s the case, and you cannot tolerate slower workloads, there are options to consider.
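A quick way to reason about headroom is to apply the worst-case figures above to your cluster capacity. The Python sketch below is back-of-the-envelope arithmetic only: the function names and the example capacity and demand figures are hypothetical, and real workloads will see different losses.

```python
# Worst-case losses quoted in the text, in percent of CPU capacity.
WORST_CASE_LOSS_PCT = {"default": 0, "SCAv2": 10, "SCAv1": 30}


def effective_capacity_ghz(capacity_ghz: float, scheduler: str) -> float:
    """Cluster CPU capacity left after the worst-case loss for a scheduler."""
    return capacity_ghz * (100 - WORST_CASE_LOSS_PCT[scheduler]) / 100


def has_headroom(capacity_ghz: float, peak_demand_ghz: float, scheduler: str) -> bool:
    """True if peak demand still fits after the worst-case loss."""
    return peak_demand_ghz <= effective_capacity_ghz(capacity_ghz, scheduler)


# Hypothetical cluster: 200 GHz of total capacity, 150 GHz of peak demand.
fits_scav2 = has_headroom(200, 150, "SCAv2")  # 180 GHz effective capacity
fits_scav1 = has_headroom(200, 150, "SCAv1")  # 140 GHz effective capacity
```

In this example the cluster could absorb the SCAv2 worst case but not the SCAv1 worst case, which is the situation the options below are meant for.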

First, while you can enable different schedulers on different hosts, it isn’t a good idea to do so. Always enable the same scheduler on all hosts in a cluster. Having differing schedulers is a recipe for confusion, errors, and security & performance problems. That said, a valid approach might be to sequester workloads that need the per-process protections of SCAv1 on a separate cluster and run SCAv2 on other clusters that are compatible with the workloads’ risk.

Second, enabling SCAv2 gets you some protections, though not the full protections of SCAv1. If you need SCAv1 you should aim for SCAv1, but SCAv2 may be a temporary solution.

Summary

vSphere 6.7 U2 and later will use the default scheduler unless it is configured under advanced settings to use either SCAv1 or SCAv2 with the flags described under each CPU scheduler model. For SCAv1 or SCAv2 to take effect, the ESXi host must be rebooted.

The advanced settings can be set either via the GUI or via ESXCLI, like this (SCAv1):

- esxcli system settings kernel set -s hyperthreadingMitigation -v TRUE

- esxcli system settings kernel set -s hyperthreadingMitigationIntraVM -v TRUE
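If you manage several hosts, it can help to generate these commands per scheduler instead of typing them. The Python sketch below only builds the command strings from the flag values listed in this article; it does not run anything on a host. The `SCHEDULER_SETTINGS` table and the `esxcli_commands` helper are illustrative names, and for the default scheduler only hyperthreadingMitigation is set, on the assumption that the second flag does not apply there (it is listed as N/A above).

```python
from typing import Dict, List

# Flag values per scheduler, as described in the sections above.
SCHEDULER_SETTINGS: Dict[str, Dict[str, str]] = {
    "default": {"hyperthreadingMitigation": "FALSE"},
    "SCAv1": {
        "hyperthreadingMitigation": "TRUE",
        "hyperthreadingMitigationIntraVM": "TRUE",
    },
    "SCAv2": {
        "hyperthreadingMitigation": "TRUE",
        "hyperthreadingMitigationIntraVM": "FALSE",
    },
}


def esxcli_commands(scheduler: str) -> List[str]:
    """Return the esxcli command lines that select the given scheduler."""
    settings = SCHEDULER_SETTINGS[scheduler]
    return [
        f"esxcli system settings kernel set -s {name} -v {value}"
        for name, value in settings.items()
    ]


for line in esxcli_commands("SCAv2"):
    print(line)
```

Remember that the host still has to be rebooted before the new scheduler takes effect.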