This is part 1.

Imagine a scenario where you deploy an application environment, and when you face a challenge with your environment or decide to upgrade a software component, you destroy it and redeploy it.

I catch myself far too often in a world where many hours are spent troubleshooting issues.

The concepts of deployment and redeployment are commonly used in the public cloud; we can adopt the same strategy on-premises using vSphere as our “cloud,” since VMware technologies are highly programmable.

Some fundamental concepts need to be in place for this to work, and the approach is not a good fit for every application.

Cattle vs. Pets

The “cattle vs. pets” analogy describes different approaches to managing servers and infrastructure in cloud computing and IT management.

Cattle:

- Concept: Servers are treated like cattle, meaning they are interchangeable, not unique, and can be easily replaced.

- Management: If a server fails, it is terminated and replaced with another one.

- Benefits: Increased reliability, scalability, and ease of management due to automation.

Pets:

- Concept: Servers are treated like pets, meaning they are unique, individually managed, and cared for.

- Management: If a server has a problem, it is individually diagnosed and fixed rather than replaced.

- Benefits: Allows for detailed customization and management of individual servers.

Stateless

Another requirement is that the application is stateless. A stateless application does not retain any session information or data about its users between requests.

Stateless applications are commonly used in cloud-native applications, where scalability and resilience are crucial. They often contrast with stateful applications, which maintain session information and require more complex infrastructure to manage state consistency across multiple servers.

In this example, we are considering an application architecture where a single PostgreSQL database hosts the application’s data. If this application were running in AWS, we would have utilized RDS. However, since we are operating in vSphere, that option is unavailable.

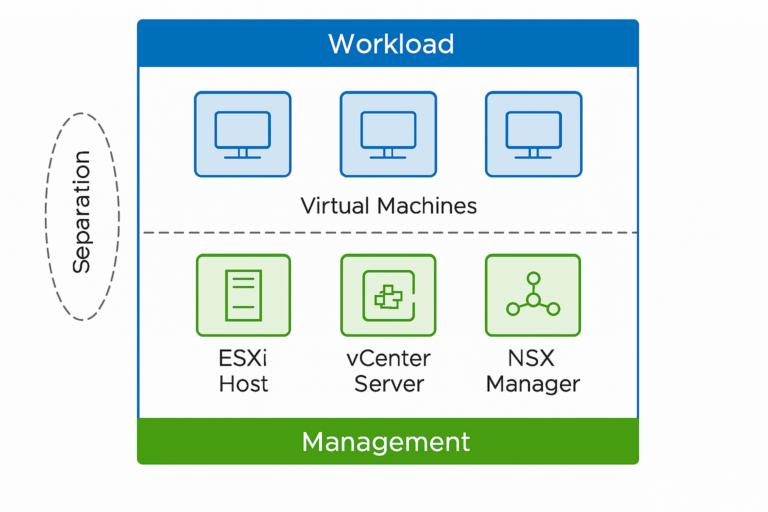

Architecture

The concept here is to deploy an application environment. This environment’s uptime is monitored with Nagios (a monitoring tool). If a VM is reported down, the VM should be redeployed. Since IaC tools work best with Linux, I am showing this using RHEL. I am sure that Windows can be automated, too.

This application architecture includes a single frontend web server that acts as both the listener and the load balancer for the application servers. In this case, there are three application servers and one backend database server. For simplicity, I am deploying using a pre-existing RHEL template.

                         +------------------+
                         |    Web Server    |
                         | (Load Balancer)  |
                         +------------------+
                                  |
             +--------------------+--------------------+
             |                    |                    |
             V                    V                    V
    +------------------+ +------------------+ +------------------+
    |   Application    | |   Application    | |   Application    |
    |     Server 1     | |     Server 2     | |     Server 3     |
    +------------------+ +------------------+ +------------------+
             |                    |                    |
             +--------------------+--------------------+
                                  |
                         +------------------+
                         | Database Server  |
                         +------------------+

I have decided to work with Ansible on this. Perhaps I will write a follow-up article that does the same with Terraform.

We have:

- 1 db server running PostgreSQL

- 3 App servers running JBoss

- 1 Web server running Apache
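To make the load-balancer role of the web server more concrete, here is a minimal bash sketch that prints an Apache `mod_proxy_balancer` fragment for the three application servers. The port `8080` and the balancer name `appcluster` are assumptions for illustration, not values taken from this environment, and the roles in this article do not deploy such a file.

```shell
#!/bin/bash
# Print an Apache mod_proxy_balancer fragment for the given app servers.
# Assumptions: JBoss listens on port 8080; "appcluster" is a hypothetical balancer name.
balancer_conf() {
    local port=8080 host
    echo '<Proxy "balancer://appcluster">'
    for host in "$@"; do
        echo "    BalancerMember \"http://${host}:${port}\""
    done
    echo '</Proxy>'
    echo 'ProxyPass "/" "balancer://appcluster/"'
    echo 'ProxyPassReverse "/" "balancer://appcluster/"'
}

# server002 to server004 are the JBoss hosts in this article
balancer_conf server002 server003 server004
```

A real setup would drop this output into a file under `/etc/httpd/conf.d/` and make sure the proxy modules are loaded.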

To keep things simple, all servers will be equipped with 4 CPUs, 16GB of RAM, and a 250GB hard drive.

Additionally, we have a Nagios Server for monitoring and one server for the Ansible Controller. The code will provide a high-level example. A functioning enterprise environment requires more features, configuration, and complexity.
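The playbooks address the servers by name, so the Ansible controller needs an inventory that maps each name to an address. The sketch below generates one in INI format. It assumes the addresses start at 192.168.100.101, which matches the Nagios host definition for server001 further down; the group name `app_environment` is hypothetical.

```shell
#!/bin/bash
# Print an INI-style Ansible inventory for server001..server00N.
# Assumption: server001 is 192.168.100.101, server002 is .102, and so on.
make_inventory() {
    local count=$1 i
    echo "[app_environment]"   # hypothetical group name
    for ((i = 1; i <= count; i++)); do
        printf 'server%03d ansible_host=192.168.100.%d\n' "$i" "$((100 + i))"
    done
}

make_inventory 5
```

Redirecting the output to a file and passing it with `ansible-playbook -i <file>` is one way to use it.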

Ansible code

We are using Ansible Roles and Vault.
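Ansible looks for each role under `roles/<name>/` with a `tasks/main.yml` entry point. As a rough sketch, the following bash function creates an empty skeleton for the four roles used in this article. The base directory is a temporary one chosen for the demo.

```shell
#!/bin/bash
# Create an empty skeleton for the roles used in this article under a base directory.
scaffold_roles() {
    local base=$1 role
    for role in deploy_vms apache jboss postgresql; do
        mkdir -p "$base/roles/$role/tasks" "$base/roles/$role/vars" "$base/roles/$role/files"
        # Only the entry point is created; the task content comes from the listings in this article.
        touch "$base/roles/$role/tasks/main.yml"
    done
}

# Demo in a throwaway directory
workdir="$(mktemp -d)"
scaffold_roles "$workdir"
find "$workdir/roles" -name main.yml | sort
```

`ansible-galaxy init <role>` produces a fuller skeleton, and `ansible-vault encrypt vault.yml` encrypts the credentials file that the playbook loads.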

‘deploy_environment.yml’

    ---
    - name: Deploy vSphere Environment
      hosts: localhost
      gather_facts: no
      vars_files:
        - "vault.yml" # Contains encrypted credentials
      tasks:
        - name: Deploy VMs
          include_role:
            name: deploy_vms
          vars:
            vm_names:
              - server001
              - server002
              - server003
              - server004
              - server005
            ip_range: "192.168.100.0/24"
            vm_template: "RHEL9"
            cpu: 4
            memory: 16384 # 16GB in MB
            disk_size: 250 # GB

    - name: Configure Servers
      hosts: all
      become: yes # Package installation needs root
      tasks:
        - name: Install Apache on server001
          include_role:
            name: apache
          when: inventory_hostname == 'server001'

        - name: Install JBoss on server002-004
          include_role:
            name: jboss
          when: inventory_hostname in ['server002', 'server003', 'server004']

        - name: Install PostgreSQL on server005
          include_role:
            name: postgresql
          when: inventory_hostname == 'server005'

Create a role named “deploy_vms” with the following structure:

tasks/main.yml

    ---
    - name: Create VMs on vSphere
      community.vmware.vmware_guest:
        hostname: "{{ vcenter_hostname }}"
        username: "{{ vcenter_username }}"
        password: "{{ vcenter_password }}"
        validate_certs: no
        datacenter: "{{ datacenter_name }}"
        cluster: "{{ cluster_name }}"
        name: "{{ item }}"
        template: "{{ vm_template }}"
        state: poweredon
        hardware:
          memory_mb: "{{ memory }}"
          num_cpus: "{{ cpu }}"
        disk:
          - size_gb: "{{ disk_size }}"
        networks:
          - name: "{{ network_name }}"
            # Assumption: server001 gets .101, server002 gets .102, and so on.
            # nthhost and ipaddr are filters from the ansible.utils collection.
            ip: "{{ ip_range | ansible.utils.nthhost(index + 101) }}"
            netmask: "{{ ip_range | ansible.utils.ipaddr('netmask') }}"
        wait_for_ip_address: yes
      loop: "{{ vm_names }}"
      loop_control:
        index_var: index
      register: vm_deployment
      ignore_errors: yes

    - name: Log VM deployment results
      ansible.builtin.copy:
        content: "{{ vm_deployment | to_nice_json }}"
        dest: "/tmp/vm_deployment.log"

    # This role runs on localhost, so the block below updates the Ansible
    # controller. To patch the new VMs, move it into the "Configure Servers" play.
    - name: Ensure VMs are updated
      block:
        - name: Update all packages
          ansible.builtin.yum:
            name: '*'
            state: latest
      rescue:
        - name: Log update failure
          ansible.builtin.copy:
            content: "Update failed for {{ item }}"
            dest: "/tmp/update_failure.log"
          loop: "{{ vm_names }}"

vars/main.yml

    ---
    vcenter_hostname: "vcenter01.test.local"
    vcenter_username: !vault |
      $ANSIBLE_VAULT;1.1;AES256
      your_encrypted_username
    vcenter_password: !vault |
      $ANSIBLE_VAULT;1.1;AES256
      your_encrypted_password
    datacenter_name: "your_datacenter"
    cluster_name: "your_cluster"
    network_name: "your_network"

Apache

    ---
    - name: Install Apache
      ansible.builtin.yum:
        name: httpd
        state: present

    - name: Start and enable Apache
      ansible.builtin.service:
        name: httpd
        state: started
        enabled: true

    - name: Log Apache installation
      ansible.builtin.copy:
        content: "Apache installed on {{ inventory_hostname }}"
        dest: "/tmp/apache_install.log"

JBoss

    ---
    - name: Install JBoss
      ansible.builtin.yum:
        name: jboss-eap7
        state: present

    - name: Start and enable JBoss
      ansible.builtin.service:
        name: jboss-eap7
        state: started
        enabled: true

    - name: Log JBoss installation
      ansible.builtin.copy:
        content: "JBoss installed on {{ inventory_hostname }}"
        dest: "/tmp/jboss_install.log"

PostgreSQL

    ---
    - name: Install PostgreSQL
      ansible.builtin.yum:
        name: postgresql-server
        state: present

    # postgresql-server from the RHEL repositories ships postgresql-setup and keeps
    # its data in /var/lib/pgsql/data; the /usr/pgsql-14 paths belong to the PGDG packages.
    - name: Initialize PostgreSQL database
      ansible.builtin.command: "/usr/bin/postgresql-setup --initdb"
      args:
        creates: /var/lib/pgsql/data/PG_VERSION

    - name: Start and enable PostgreSQL
      ansible.builtin.service:
        name: postgresql
        state: started
        enabled: true

    - name: Log PostgreSQL installation
      ansible.builtin.copy:
        content: "PostgreSQL installed on {{ inventory_hostname }}"
        dest: "/tmp/postgresql_install.log"

Nagios monitoring

Install the NRPE agent and allow SSH access from the Nagios server to our five VMs.

    ---
    - name: Install NRPE and configure SSH keys
      hosts: server001, server002, server003, server004, server005
      become: yes
      vars:
        nagios_server_user: "nagios"
        nagios_server_ip: "192.168.100.10" # Replace with actual Nagios server IP
        # lookup('file', ...) reads this public key on the Ansible controller
        ssh_key_file: "/home/{{ nagios_server_user }}/.ssh/id_rsa.pub"
      tasks:
        - name: Install NRPE and Nagios plugins
          ansible.builtin.yum:
            name:
              - nrpe
              - nagios-plugins-all
            state: present

        - name: Allow Nagios server in NRPE configuration
          ansible.builtin.lineinfile:
            path: /etc/nagios/nrpe.cfg
            regexp: '^allowed_hosts='
            line: 'allowed_hosts=127.0.0.1,{{ nagios_server_ip }}'

        - name: Restart NRPE service
          ansible.builtin.service:
            name: nrpe
            state: restarted

        - name: Create Nagios user
          ansible.builtin.user:
            name: "{{ nagios_server_user }}"
            state: present
            shell: /bin/bash

        - name: Add Nagios server SSH key
          ansible.builtin.authorized_key:
            user: "{{ nagios_server_user }}"
            state: present
            key: "{{ lookup('file', ssh_key_file) }}"
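After the play has run, it can be useful to confirm that the `allowed_hosts` line really lists the Nagios server. The helper below is a small sketch of such a check. The function name `nrpe_allows` is made up for this example, and the demo runs against a temporary file rather than the real `/etc/nagios/nrpe.cfg`.

```shell
#!/bin/bash
# Return 0 if the allowed_hosts line of an nrpe.cfg-style file lists the given IP.
nrpe_allows() {
    local cfg=$1 ip=$2 hosts
    # Take the last allowed_hosts line and keep only the value after "=".
    hosts="$(grep -E '^allowed_hosts=' "$cfg" | tail -n 1 | cut -d= -f2)"
    # Strip spaces and wrap in commas so only whole entries match.
    case ",${hosts// /}," in
        *",$ip,"*) return 0 ;;
        *) return 1 ;;
    esac
}

# Demo against a temporary file
sample="$(mktemp)"
echo 'allowed_hosts=127.0.0.1,192.168.100.10' > "$sample"
nrpe_allows "$sample" 192.168.100.10 && echo "Nagios server is allowed"
```

On a monitored VM the call would be `nrpe_allows /etc/nagios/nrpe.cfg 192.168.100.10`.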

Set up Nagios monitoring on the five VMs. We will monitor CPU, RAM, disk, uptime, and whether the VM responds.

    ---
    - name: Install and configure Nagios NRPE on VMs
      hosts: server001, server002, server003, server004, server005
      become: yes
      vars:
        nagios_server_ip: "192.168.100.10" # Replace with the actual Nagios server IP
      tasks:
        - name: Install NRPE and Nagios plugins
          ansible.builtin.yum:
            name:
              - nrpe # EPEL package name on RHEL; nagios-nrpe-server is the Debian name
              - nagios-plugins-all
            state: present

        - name: Configure NRPE to allow Nagios server access
          ansible.builtin.lineinfile:
            path: /etc/nagios/nrpe.cfg
            regexp: '^allowed_hosts='
            line: 'allowed_hosts=127.0.0.1,{{ nagios_server_ip }}'

        - name: Copy custom check scripts
          ansible.builtin.copy:
            src: check_memory.sh
            dest: /usr/local/nagios/libexec/check_memory.sh
            mode: '0755'

        # blockinfile is used because lineinfile manages a single line only.
        # check_cpu is not part of the stock plugin set; check_load is the usual substitute.
        - name: Add NRPE commands for monitoring
          ansible.builtin.blockinfile:
            path: /etc/nagios/nrpe.cfg
            block: |
              command[check_memory]=/usr/local/nagios/libexec/check_memory.sh -w 80 -c 90
              command[check_disk]=/usr/lib64/nagios/plugins/check_disk -w 20% -c 10% -p /
              command[check_cpu]=/usr/lib64/nagios/plugins/check_cpu -w 80 -c 90
              command[check_uptime]=/usr/lib64/nagios/plugins/check_uptime

        # Restart last so NRPE picks up the new commands
        - name: Restart NRPE service
          ansible.builtin.service:
            name: nrpe
            state: restarted

    - name: Configure Nagios server
      hosts: nagios_server
      become: yes
      vars:
        # In this play inventory_hostname is the Nagios server itself,
        # so the monitored VMs are listed explicitly.
        monitored_vms: [server001, server002, server003, server004, server005]
      tasks:
        - name: Add host definitions for monitoring VMs
          ansible.builtin.blockinfile:
            path: "/usr/local/nagios/etc/servers/{{ item }}.cfg"
            create: yes
            block: |
              define host {
                  use             linux-server
                  host_name       {{ item }}
                  address         {{ hostvars[item].ansible_host }}
                  check_command   check-host-alive
              }
          loop: "{{ monitored_vms }}"

        - name: Add service definitions for monitoring
          ansible.builtin.blockinfile:
            path: "/usr/local/nagios/etc/servers/{{ item }}_services.cfg"
            create: yes
            block: |
              define service {
                  use                  generic-service
                  host_name            {{ item }}
                  service_description  CPU Load
                  check_command        check_nrpe!check_cpu
              }
              define service {
                  use                  generic-service
                  host_name            {{ item }}
                  service_description  Memory Usage
                  check_command        check_nrpe!check_memory
              }
              define service {
                  use                  generic-service
                  host_name            {{ item }}
                  service_description  Disk Usage
                  check_command        check_nrpe!check_disk
              }
              define service {
                  use                  generic-service
                  host_name            {{ item }}
                  service_description  Uptime
                  check_command        check_nrpe!check_uptime
              }
          loop: "{{ monitored_vms }}"

        - name: Restart Nagios service
          ansible.builtin.service:
            name: nagios
            state: restarted

Create a custom script for memory checks and place it in the `files` directory.

check_memory.sh

    #!/bin/bash
    # Example script to check memory usage
    # Usage: check_memory.sh -w <warning %> -c <critical %>
    # The thresholds are read positionally: $2 is the warning value, $4 the critical value.
    FreeM=$(free -m)
    memTotal_m=$(echo "$FreeM" | grep Mem | awk '{print $2}')
    memUsed_m=$(echo "$FreeM" | grep Mem | awk '{print $3}')
    memFree_m=$(echo "$FreeM" | grep Mem | awk '{print $4}')
    memUsedPrc=$((($memUsed_m*100)/$memTotal_m))

    # Exit codes follow the Nagios plugin convention: 0 = OK, 1 = WARNING, 2 = CRITICAL
    if [ "$memUsedPrc" -ge "$4" ]; then
        echo "Memory: CRITICAL - $memUsedPrc% used"
        exit 2
    elif [ "$memUsedPrc" -ge "$2" ]; then
        echo "Memory: WARNING - $memUsedPrc% used"
        exit 1
    else
        echo "Memory: OK - $memUsedPrc% used"
        exit 0
    fi

Define monitoring

Define the hosts you are monitoring

    define host {
        use                     linux-server
        host_name               server001
        alias                   Front-end Server
        address                 192.168.100.101
        check_command           check-host-alive
        max_check_attempts      3
        notification_interval   5
        notification_period     24x7
        notification_options    d,u,r
        contact_groups          admins
    }

    define service {
        use                     generic-service
        host_name               server001
        service_description     VM Status
        check_command           check_nrpe!check_vm_status
        max_check_attempts      3
        normal_check_interval   5
        retry_check_interval    1
        notification_interval   5
        notification_period     24x7
        notification_options    w,u,c,r
        contact_groups          admins
    }

On the VM being monitored, the NRPE configuration must include a command to check the VM’s status. Add the following to `/etc/nagios/nrpe.cfg`:

    command[check_vm_status]=/usr/lib64/nagios/plugins/check_ping -H 127.0.0.1 -w 100.0,20% -c 500.0,60%

Define the recipients in your admin group

    define contactgroup {
        contactgroup_name   admins
        alias               Nagios Administrators
        members             nagiosadmin
    }

    define contact {
        contact_name        nagiosadmin
        use                 generic-contact
        alias               Nagios Admin
        email               nagiosadmin@example.com
    }

If no response trigger…

nagios_event_handler.sh

    #!/bin/bash
    # Nagios variables
    HOSTNAME=$1
    HOSTSTATE=$2

    # Check if the host is down
    if [ "$HOSTSTATE" == "DOWN" ]; then
        # Trigger Ansible to redeploy the VM
        ansible-playbook /path/to/redeploy_vm.yml --extra-vars "vm_name=$HOSTNAME"
    fi

Make the code executable

    chmod +x /path/to/nagios_event_handler.sh

Configure Nagios to Use the Event Handler

    define command {
        command_name    redeploy_vm
        command_line    /path/to/nagios_event_handler.sh $HOSTNAME$ $HOSTSTATE$
    }

    define host {
        use                     linux-server
        host_name               server001
        alias                   Server001
        address                 192.168.100.101
        max_check_attempts      5
        check_period            24x7
        notification_interval   30
        notification_period     24x7
        event_handler           redeploy_vm
    }

Ansible Playbook for Redeployment

redeploy_vm.yml

    ---
    - name: Manage VM Lifecycle
      hosts: localhost
      gather_facts: no
      vars_files:
        - "vault.yml" # Assumed to define vcenter_hostname, vcenter_username, vcenter_password
      vars:
        # vm_name is passed as an extra variable by the event handler
        vm_unresponsive: true # The handler only calls this playbook when the host is DOWN
        ip_range: "192.168.100.0/24"
        # Assumption: the numeric suffix of the name maps to the address (server001 -> .101)
        vm_index: "{{ vm_name[-3:] | int }}"
        vm_template: "RHEL9"
        datacenter_name: "Datacenter"
        cluster_name: "Cluster"
        network_name: "Network"
        cpu: 4
        memory: 16384 # 16GB in MB
        disk_size: 250 # GB
      tasks:
        - name: Destroy VM if unresponsive
          community.vmware.vmware_guest:
            hostname: "{{ vcenter_hostname }}"
            username: "{{ vcenter_username }}"
            password: "{{ vcenter_password }}"
            validate_certs: no
            datacenter: "{{ datacenter_name }}"
            name: "{{ vm_name }}"
            state: absent
            force: yes # Needed to remove a VM that is still powered on
          when: vm_unresponsive | bool

        - name: Rebuild VM
          community.vmware.vmware_guest:
            hostname: "{{ vcenter_hostname }}"
            username: "{{ vcenter_username }}"
            password: "{{ vcenter_password }}"
            validate_certs: no
            datacenter: "{{ datacenter_name }}"
            cluster: "{{ cluster_name }}"
            name: "{{ vm_name }}"
            template: "{{ vm_template }}"
            state: poweredon
            hardware:
              memory_mb: "{{ memory }}"
              num_cpus: "{{ cpu }}"
            disk:
              - size_gb: "{{ disk_size }}"
            networks:
              - name: "{{ network_name }}"
                # nthhost and ipaddr are filters from the ansible.utils collection
                ip: "{{ ip_range | ansible.utils.nthhost(100 + (vm_index | int)) }}"
                netmask: "{{ ip_range | ansible.utils.ipaddr('netmask') }}"
            wait_for_ip_address: yes
          when: vm_unresponsive | bool

Next Steps

I hope the reader understands that this is highly simplified. The VMs set up here serve no real purpose yet: they run no application and do not connect to anything.
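One simplification in `nagios_event_handler.sh` deserves a note. Nagios also runs host event handlers for SOFT state changes, that is, while it is still retrying the check, so a single missed ping could already trigger a rebuild. A possible guard, sketched below, is to also pass the `$HOSTSTATETYPE$` macro and act only on a HARD DOWN. The function name `should_redeploy` is hypothetical, and the `command_line` would need the extra macro appended.

```shell
#!/bin/bash
# Decide whether a host event should trigger a redeploy.
# The arguments mirror the Nagios macros $HOSTSTATE$ and $HOSTSTATETYPE$.
should_redeploy() {
    local state=$1 state_type=$2
    [ "$state" = "DOWN" ] && [ "$state_type" = "HARD" ]
}

# Demo: only the confirmed (HARD) failure would start the playbook
should_redeploy DOWN SOFT || echo "SOFT DOWN: wait for the remaining retries"
should_redeploy DOWN HARD && echo "HARD DOWN: redeploy"
```

In the handler, the `ansible-playbook` call would then sit behind `if should_redeploy "$2" "$3"; then ... fi`.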

Coming next: screenshots of me running this code in a vSphere environment.